Large language models (LLMs) have set the world alight, showing clear use cases for machine intelligence. But what exactly are they, what are they not, and how do they work?

No-one can have failed to notice that generative artificial intelligence (AI), and specifically large language models, has been making waves in the tech industry.

But what exactly are generative AIs?

Put simply, generative AI is a type of artificial intelligence that creates new content, such as human-like text, images or code, in response to some input. Large language models are the text-focused kind: they are trained on a vast and diverse body of text, much of it from the internet. They cannot reliably say which documents were part of their training set, and they have no access to your personal data unless it is explicitly provided in the conversation.

Large language models such as GPT-4, developed by OpenAI, and Llama, developed by Meta AI, can interpret and generate human language with remarkable fluency. They can answer questions, write essays, summarise long documents, translate languages, and even create software code.

These models work by predicting the next word in a piece of text (strictly, the next token, which may be a whole word or part of one). They take all the preceding text as input, predict what comes next, append that prediction, and repeat the process until a full sentence or paragraph is formed.
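
To make that loop concrete, here is a deliberately tiny Python sketch. It is not how an LLM is built: it replaces the neural network with simple word-pair counts (a bigram model) and looks only at the last word rather than the whole preceding text. The generate-one-word, append, repeat loop is the same idea, though. The function names and training sentence are illustrative.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count, for every word in a tiny training text, which words follow it."""
    words = corpus.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        follows[current][following] += 1
    return follows


def generate(model: dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Repeatedly predict the next word and append it to the text so far."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the last word never had a successor in the training text
        # Greedy choice: take the most frequent continuation.
        # (Real LLMs score every token in their vocabulary and often sample.)
        next_word, _count = candidates.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


model = train_bigram("the cat sat on the mat . the cat ate the fish .")
print(generate(model, "the", max_new_words=4))  # the cat sat on the
```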

What they are not

While these models are powerful, they have real limitations. They can write, but they have no beliefs or desires. They have no grounded understanding of the world and lack reliable common sense, so they can produce fluent answers that are simply wrong. They can’t access or retrieve personal data unless it has been shared with them during the conversation. They generate responses from patterns learned during training and cannot independently think or feel.

How they are used

While a lot of people have had fun playing with ChatGPT, generative AI is more than a parlour trick. Large language models can be incorporated into various business scenarios:

- Finance

In finance, these models can be used to read and interpret financial reports, news articles, and social media posts, for example to gauge market sentiment as one input to stock market analysis. They can also help automate customer service by answering queries about account balances, transaction histories, and more, provided they are connected to the systems that hold that data.

- Healthcare

In healthcare, they can help doctors by pulling out relevant medical information from patient records, research papers, or clinical guidelines. They can also assist patients by answering health-related queries or explaining complex medical terms in simple language.

- Retail

In retail, these models can be used to automate customer service inquiries, provide personalised shopping recommendations based on customer preferences or past purchases, and even generate product descriptions.

- Manufacturing

In manufacturing, these models can assist in analysing and predicting equipment failures by understanding maintenance reports and logs. They can also help in automating the documentation process.

Technical implementation: an approach and example

Bringing large language models into your business involves integrating a model’s API into your existing systems. With Azure OpenAI Service, the model itself is hosted in Microsoft’s Azure cloud and accessed via API calls.

Crucially, this means you don’t need to worry about the computational resources required to run such a large model; Azure handles all of that for you.

The typical solution architecture would involve your application sending a prompt to the model’s API and then receiving a response. This could be done directly from your application or via an intermediate service that handles additional business logic.
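
As a sketch of the first option, calling the model’s API directly from your application, the Python below builds a request for the Azure OpenAI chat completions REST endpoint using only the standard library. The resource endpoint, deployment name, key and `api_version` value are placeholders you would replace with your own; check the Azure OpenAI REST reference for the API versions your resource supports. The code only builds the request so it can be inspected; sending it is left to `urllib.request.urlopen`.

```python
import json
import urllib.request


def build_chat_request(
    endpoint: str,
    deployment: str,
    api_key: str,
    user_prompt: str,
    system_prompt: str = "You are a helpful assistant.",
    api_version: str = "2024-02-01",  # assumption: use a version your resource supports
) -> urllib.request.Request:
    """Build (but do not send) a POST request to an Azure OpenAI chat deployment."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    body = {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": 0.2,  # lower values give more predictable answers
        "max_tokens": 300,   # cap the length of the generated reply
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the generated text out of a chat completions response body."""
    return response_json["choices"][0]["message"]["content"]


request = build_chat_request(
    endpoint="https://my-resource.openai.azure.com",  # hypothetical resource
    deployment="my-gpt-deployment",                   # hypothetical deployment name
    api_key="<your-key>",
    user_prompt="What are your opening hours?",
)
# In a real application:
# with urllib.request.urlopen(request) as resp:
#     print(extract_reply(json.load(resp)))
```

An intermediate service would wrap the same two functions, adding its business logic (authentication, logging, prompt templates) before and after the call.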

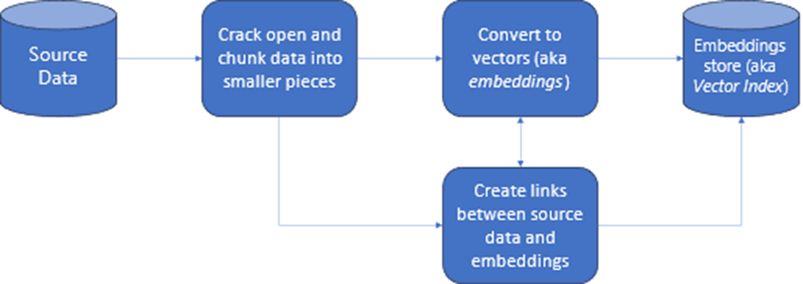

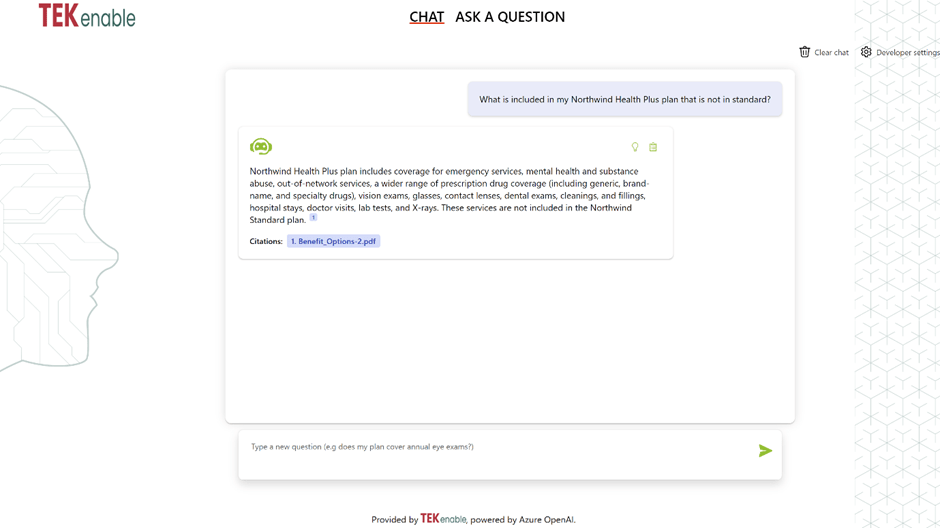

Retrieval Augmented Generation (RAG) is a pattern that combines a pretrained LLM with your own data. At query time, relevant passages are retrieved from your data and added to the prompt, so the model can base its response on your information rather than on its training data alone.

Enabling an LLM to use custom data involves several steps. First, the dataset is broken down into smaller, more manageable segments, often called chunks. These chunks are then converted into a searchable form, typically vector embeddings: lists of numbers that capture the meaning of each chunk, so that related text can be found by similarity search.
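
A minimal Python sketch of the first step, splitting a long document into overlapping chunks of words, might look like this. The chunk size and overlap are illustrative values; production systems often split on tokens, sentences or document sections instead, and then pass each chunk to an embedding model (not shown here).

```python
def chunk_words(text: str, chunk_size: int = 100, overlap: int = 20) -> list[str]:
    """Split text into chunks of up to `chunk_size` words.

    Consecutive chunks share `overlap` words, so text near a boundary appears
    in two chunks and keeps some of its surrounding context.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_words(sample, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Overlap trades a little extra storage for fewer passages that are cut awkwardly at a chunk boundary.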

Once converted, this data is stored in an index, such as a search service or vector database, that supports fast retrieval. It’s also crucial to preserve relevant metadata, such as the source document and page, so that the LLM’s responses can include citations or references.
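
The storage and retrieval steps can be sketched with an in-memory index. To keep the example self-contained, it swaps real embeddings for simple word-count vectors compared with cosine similarity; a real system would call an embedding model and store the vectors in a search service or vector database. Each chunk keeps its source and page as metadata so the final prompt can ask the model to cite them. The class names, document names and contents are hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str  # metadata kept for citations
    page: int


def vectorise(text: str) -> Counter:
    """Stand-in for an embedding model: lower-cased word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    def __init__(self) -> None:
        self._entries: list[tuple[Counter, Chunk]] = []

    def add(self, chunk: Chunk) -> None:
        self._entries.append((vectorise(chunk.text), chunk))

    def search(self, query: str, top_k: int = 2) -> list[Chunk]:
        """Return the `top_k` chunks most similar to the query."""
        q = vectorise(query)
        ranked = sorted(self._entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [chunk for _, chunk in ranked[:top_k]]


def build_grounded_prompt(question: str, chunks: list[Chunk]) -> str:
    """Put the retrieved chunks, tagged with their sources, in front of the question."""
    context = "\n".join(f"[{c.source}, p.{c.page}] {c.text}" for c in chunks)
    return (
        "Answer using only the sources below and cite them in brackets.\n"
        f"{context}\n\nQuestion: {question}"
    )


index = InMemoryIndex()
index.add(Chunk("Refunds are issued within 14 days of a return.", "returns-policy.pdf", 2))
index.add(Chunk("Standard delivery takes 3 to 5 working days.", "shipping-faq.pdf", 1))
index.add(Chunk("Gift cards cannot be exchanged for cash.", "terms.pdf", 7))

hits = index.search("How long do refunds take?", top_k=1)
print(build_grounded_prompt("How long do refunds take?", hits))
```

Replacing `vectorise` with calls to a real embedding model would leave the rest of the structure unchanged.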

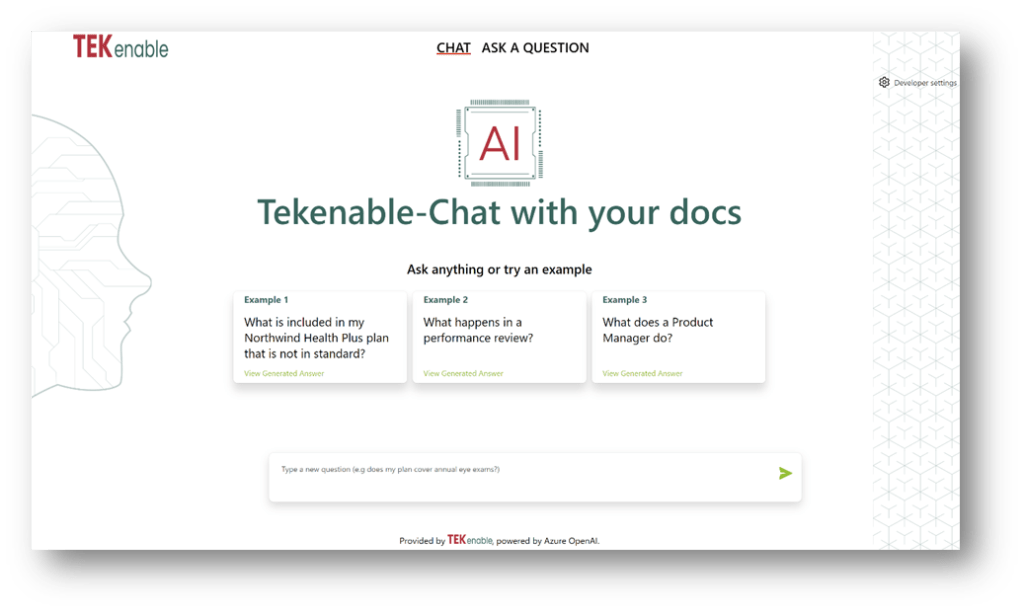

For example, in a customer service application, when a customer asks a question, your application would send that question as a prompt to the model’s API. The API would return a generated response which your application could then display back to the customer.

It’s important to note that while these models can generate very human-like text, they should not be used to make decisions on their own. The generated text should always be reviewed or moderated before being used in a critical business process.
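
One way to enforce that review step in code is to treat every generated answer as a draft that cannot be sent until a person approves it. The Python below is a hypothetical sketch: `generate_reply` stands in for whatever function calls the model’s API, and it is stubbed with `fake_model` so the example runs offline. The class and function names are illustrative, not part of any Azure SDK.

```python
from dataclasses import dataclass
from typing import Callable, Optional


class ReviewRequired(Exception):
    """Raised when unreviewed model output is about to reach a customer."""


@dataclass
class Draft:
    question: str
    text: str
    approved: bool = False
    reviewer: Optional[str] = None


def draft_answer(question: str, generate_reply: Callable[[str], str]) -> Draft:
    """Ask the model for an answer, but only ever return it as an unapproved draft."""
    return Draft(question=question, text=generate_reply(question))


def approve(draft: Draft, reviewer: str, edited_text: Optional[str] = None) -> Draft:
    """Record a human approval; the reviewer may correct the text first."""
    if edited_text is not None:
        draft.text = edited_text
    draft.approved = True
    draft.reviewer = reviewer
    return draft


def send_to_customer(draft: Draft) -> str:
    """Release text to the customer only after a person has approved it."""
    if not draft.approved:
        raise ReviewRequired("Generated text must be reviewed before it is sent.")
    return draft.text


def fake_model(question: str) -> str:
    """Stub in place of a real model call, so the example runs offline."""
    return f"Thanks for asking about {question}. Our team will confirm shortly."


draft = draft_answer("your refund", fake_model)
approve(draft, reviewer="agent-42")
print(send_to_customer(draft))
```

Recording the reviewer on each draft also leaves a trail showing who signed off on what.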

One of the key advantages of these models is their ability to work with both structured and unstructured data.

For instance, if you have a database of customer interactions (structured data), you could use an LLM to analyse those interactions and generate insights about common customer issues or questions.

Similarly, if you have a collection of customer reviews or feedback (unstructured data), you could use an LLM to identify common themes or sentiments expressed by your customers.
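
Both cases usually come down to putting the data into the prompt and asking for output in a form your application can process. The Python below is a hedged sketch: it renders database-style rows as a compact table for a prompt, asks for a JSON list of themes per review, and tallies the result. The prompt wording, field names and JSON shape are assumptions, and the model’s reply is stubbed so the example runs offline; real replies should be validated, because a model may not follow the requested format.

```python
import json
from collections import Counter


def rows_to_prompt(rows: list[dict], instruction: str) -> str:
    """Render structured rows (e.g. from a database query) as a pipe-separated table."""
    if not rows:
        return instruction
    columns = list(rows[0])
    lines = [" | ".join(columns)]
    lines += [" | ".join(str(row[c]) for c in columns) for row in rows]
    return f"{instruction}\n\n" + "\n".join(lines)


def review_theme_prompt(reviews: list[str]) -> str:
    """Ask for one theme label per free-text review, returned as JSON."""
    numbered = "\n".join(f"{i}. {text}" for i, text in enumerate(reviews, start=1))
    return (
        "For each customer review below, give its main theme in one or two words. "
        'Reply only with JSON of the form {"themes": ["...", "..."]}.\n\n' + numbered
    )


def tally_themes(model_reply: str) -> Counter:
    """Parse the model's JSON reply and count how often each theme appears."""
    themes = json.loads(model_reply)["themes"]
    return Counter(t.strip().lower() for t in themes)


interactions = [
    {"date": "2024-03-01", "channel": "chat", "topic": "late delivery"},
    {"date": "2024-03-02", "channel": "email", "topic": "refund"},
]
print(rows_to_prompt(interactions, "Summarise the most common customer issues."))

reviews = ["Arrived a week late.", "Great quality!", "Delivery took ages."]
prompt = review_theme_prompt(reviews)
stub_reply = '{"themes": ["Delivery", "Quality", "delivery"]}'  # stand-in for a model response
print(tally_themes(stub_reply).most_common())  # [('delivery', 2), ('quality', 1)]
```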

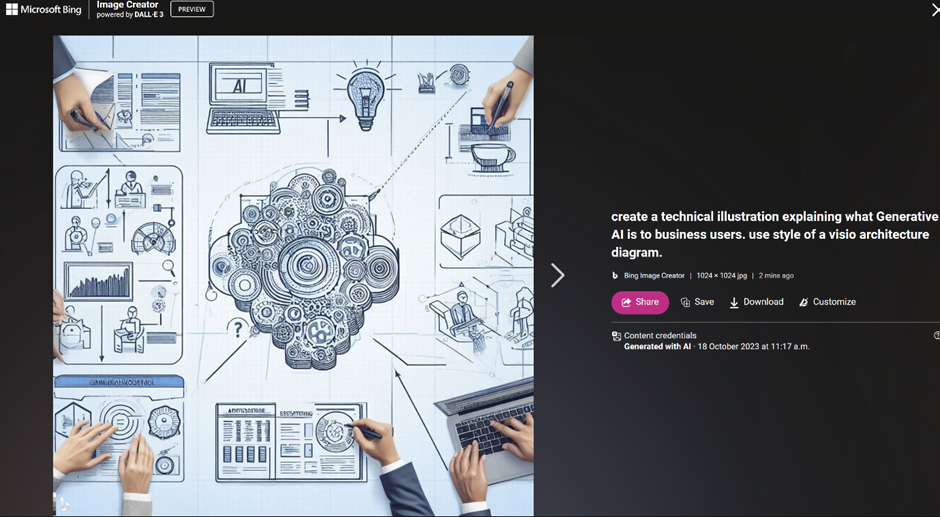

Here is an example of prompting the DALL-E model embedded in the Bing Image Creator service. I could have spent an hour finding or making an illustration; instead, I asked the generative AI model to draw one from a simple natural-language prompt.

This ability to work with both structured and unstructured data makes these models versatile and valuable across different industries. It allows businesses to use their existing data in new ways, which can lead to significant productivity gains.

Azure AI: Data Privacy and Governance

Microsoft Azure gives organisations the opportunity to adopt large language models such as OpenAI’s GPT or Meta AI’s Llama within their own ‘tenant’. This keeps the data processed by these models under the organisation’s control, significantly reducing data privacy concerns.

Hosting these models within their own Azure tenant helps organisations meet regulatory requirements, including GDPR for data privacy and HIPAA for healthcare information, although compliance still depends on how each solution is designed and operated.

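Moreover, Azure provides governance tools that help organisations monitor how these models are being used. This includes tracking who is accessing the model, what prompts are being sent to it, and what responses it is generating, although capturing the full text of prompts and responses may require additional configuration, for example logging in your own application or in an API gateway.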
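
Many teams also keep an application-level audit trail of who sent which prompt and what came back. A minimal Python sketch, writing one JSON line per call, is below. It is not an Azure feature: the `echo_model` stub, the log format and the field names are assumptions. In practice the log would go to a protected store with access controls and retention rules that match your regulatory obligations, since prompts can themselves contain personal data.

```python
import io
import json
from datetime import datetime, timezone
from typing import Callable, TextIO


def audited(call_model: Callable[[str], str], log: TextIO) -> Callable[[str, str], str]:
    """Wrap a model-calling function so every call is written to an audit log."""

    def wrapper(user_id: str, prompt: str) -> str:
        response = call_model(prompt)
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user_id,
            "prompt": prompt,
            "response": response,
        }
        log.write(json.dumps(record) + "\n")  # one JSON object per line
        return response

    return wrapper


def echo_model(prompt: str) -> str:
    """Stub standing in for a real model call."""
    return f"(model reply to: {prompt})"


audit_log = io.StringIO()  # a file or log service in a real deployment
ask = audited(echo_model, audit_log)
ask("alice@example.com", "Summarise last quarter's complaints.")

entries = [json.loads(line) for line in audit_log.getvalue().splitlines()]
print(entries[0]["user"], "->", entries[0]["prompt"])
```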

This level of control and visibility is crucial for organisations operating in highly regulated industries like finance or healthcare where misuse of data can lead to significant penalties.

By adopting large language models within their own tenant, for example through Azure OpenAI Service, organisations can benefit from the power of these models while remaining in control of their data.

Azure OpenAI Studio

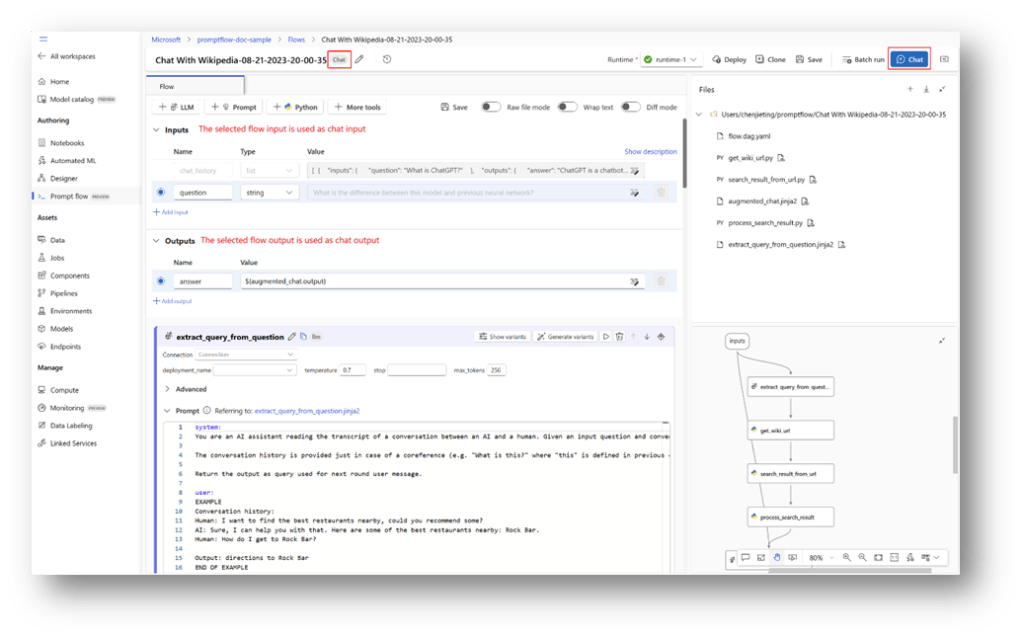

Azure OpenAI Studio is a web-based tool that allows organisations to deploy, customise, and experiment with large language models within their own Azure tenant. It provides a user-friendly interface for managing much of the model lifecycle, from connecting your own data and fine-tuning models to deployment and monitoring.

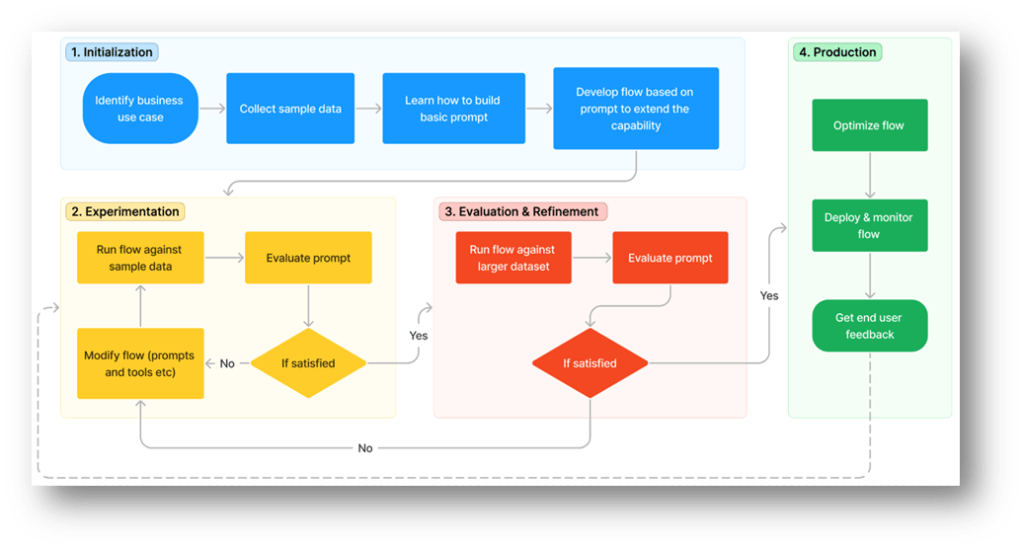

Another key tool is prompt flow, available in Azure AI Studio and Azure Machine Learning. It lets users build a flow that chains prompts, model calls, and code to guide the model’s responses. This can be particularly useful in customer service scenarios where the model needs to ask a series of questions to gather the necessary information from the customer.
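
To illustrate the customer-service case, here is a hypothetical Python sketch of the control logic such a flow might encode: the application tracks which details it still needs, asks for them one at a time, and only hands over to the model (stubbed here) once everything is known. It does not use prompt flow’s own SDK; the field names, questions and `answer_with_model` stub are assumptions.

```python
from typing import Callable, Optional

# Hypothetical details a support flow needs before it can help.
REQUIRED_FIELDS = {
    "account_number": "Could you tell me your account number?",
    "issue": "What seems to be the problem?",
    "contact": "How would you like us to contact you?",
}


def next_question(collected: dict[str, str]) -> Optional[str]:
    """Return the question for the first missing field, or None when all are present."""
    for field, question in REQUIRED_FIELDS.items():
        if not collected.get(field):
            return question
    return None


def step(collected: dict[str, str], answer_with_model: Callable[[dict[str, str]], str]) -> str:
    """One turn of the flow: keep asking questions, then let the model respond."""
    question = next_question(collected)
    if question is not None:
        return question
    return answer_with_model(collected)


def answer_with_model(details: dict[str, str]) -> str:
    """Stub standing in for a prompt sent to the model with the gathered details."""
    return f"Thanks. We'll look into '{details['issue']}' and reply by {details['contact']}."


state: dict[str, str] = {}
print(step(state, answer_with_model))  # asks for the account number
state.update(account_number="12345", issue="double charge")
print(step(state, answer_with_model))  # asks how to make contact
state["contact"] = "email"
print(step(state, answer_with_model))
```

Keeping the question order in plain data makes the sequence easy to test and change without touching the prompt itself.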

Prompt flow supports a systematic development cycle: you can build a flow, test and evaluate it against sample inputs, refine it, and then deploy it. This makes it easier to develop robust, sophisticated AI applications.

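Another important feature is AI safety and moderation, provided through content filtering built on Azure AI Content Safety. It lets organisations set how strictly potentially harmful content, such as hate, sexual, violent, or self-harm content, is filtered in both prompts and responses, and add custom blocklists. This helps keep the model’s responses appropriate and in line with the organisation’s policies.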
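
The platform-side safety settings are configured in Azure rather than in your code, but some teams add their own application-level check for organisation-specific rules. The Python below sketches that idea with a hypothetical blocklist of phrases matched as whole words. It is a simple stand-in, not the Azure AI Content Safety API, and it complements rather than replaces the platform filters.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ModerationResult:
    allowed: bool
    matches: list[str] = field(default_factory=list)


def check_text(text: str, blocklist: list[str]) -> ModerationResult:
    """Flag text containing any blocked phrase, matched case-insensitively as whole words."""
    matches = [
        phrase
        for phrase in blocklist
        if re.search(rf"\b{re.escape(phrase)}\b", text, flags=re.IGNORECASE)
    ]
    return ModerationResult(allowed=not matches, matches=matches)


def safe_reply(model_reply: str, blocklist: list[str], fallback: str) -> str:
    """Return the model's reply only if it passes the check; otherwise a safe fallback."""
    return model_reply if check_text(model_reply, blocklist).allowed else fallback


# Hypothetical organisation-specific rules, e.g. never promise returns or name competitors.
BLOCKLIST = ["guaranteed returns", "CompetitorCo"]
FALLBACK = "I'm sorry, I can't help with that. A colleague will follow up."

print(check_text("We offer guaranteed returns of 10%.", BLOCKLIST))
print(safe_reply("Your order has shipped.", BLOCKLIST, FALLBACK))
```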

By using Azure OpenAI Studio, organisations can not only adopt large language models within their own tenant but also gain a high degree of control over how these models are used and what they generate.

Conclusion: AI in Azure is a boon to business

Incorporating Azure OpenAI Service into your business can lead to significant improvements in efficiency and customer satisfaction. By understanding what large language models can and cannot do, you can better plan how to use them in your specific industry context. With Azure OpenAI, you can do so while keeping your data private and secure.