Welcome back to our enlightening journey through the realm of Generative AI. As we venture into the third part of this series, we will unravel the mysteries of driving large language models, the art of fine-tuning them on Azure, and the importance of a robust security model.

In the previous parts, we explored the fundamentals of large language models and their applications, such as Microsoft’s CoPilot. Now, we will delve deeper into the practical aspects of these models, focusing on how to effectively use and manage them in a business environment.

Driving Large Language Models: Prompt Engineering

Prompt engineering is not just a technique, but a key skill that unlocks the full potential of large language models. It’s akin to learning the controls of a powerful sports car. The car has the capability to deliver high performance, but to truly leverage its power, you need to understand how to operate it effectively.

In the context of large language models, the model is the sports car, and prompt engineering is the driving skill. The better your understanding and command of prompt engineering, the more effectively you can harness the power of the model.

For instance, consider a scenario where you want the model to generate a list of innovative marketing strategies. A simple prompt like “marketing strategies” might result in a generic list. But with effective prompt engineering, you can guide the model to generate a more specific and useful output. For example, you could use a prompt like “Here are some innovative marketing strategies for a startup in the renewable energy sector:”. This prompt sets a clear context and guides the model to generate a list of strategies specifically tailored to startups in the renewable energy sector.

But prompt engineering goes beyond crafting effective prompts. It’s also about structuring the conversation with the model, especially in multi-turn interactions. For example, including the conversation history in your prompt can provide the necessary context for the model’s responses, leading to more coherent and relevant outputs.

As organizations adopt large language models, training business users in prompt engineering becomes crucial. Just as you would train employees to use a new software tool, training them in prompt engineering can help them more effectively leverage the power of large language models. This training could involve understanding the behaviour of the model, learning how to craft effective prompts, and practicing through hands-on exercises.

The beauty of prompt engineering is that it allows you to customize the model’s output to suit your specific needs. Whether you’re generating content for a blog post, drafting an email, or creating responses for a chatbot, prompt engineering gives you the control to shape the model’s output, making it a powerful tool in your AI toolkit.

Prompt Engineering is a critical skill in the era of large language models. By mastering this skill, organizations can fully leverage the power of these models, leading to more effective and impactful applications of AI.

What is Fine-Tuning and How Does it Work on Azure?

Fine-tuning is a powerful technique that tailors pre-trained models to better suit specific tasks or domains. It involves additional training of the pre-trained model on a specific dataset, enabling the model to specialize and improve its performance on that task.

Azure provides a robust and user-friendly platform for fine-tuning Azure OpenAI models. Fine-tuning Azure OpenAI models involves several best practices to ensure optimal results:

1. Data Preparation:

The first step in fine-tuning is preparing your dataset. This involves curating a dataset that closely aligns with the task you want the model to excel at. Prepare your training and validation datasets carefully. The quality and relevance of your data can significantly impact the performance of the fine-tuned model

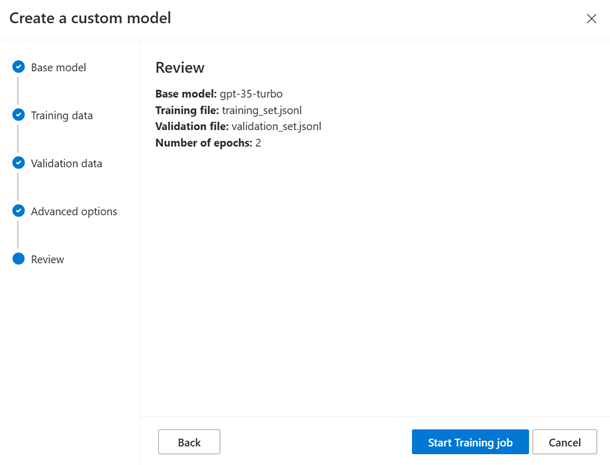

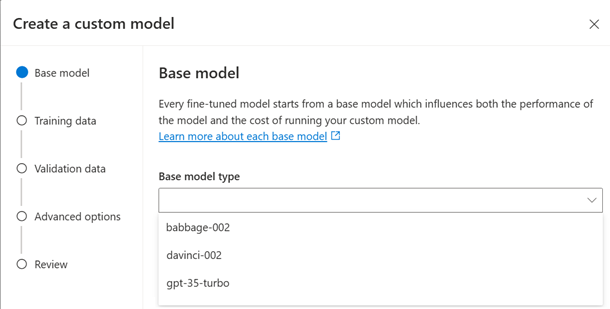

2. Fine-Tuning Workflow:

Follow the recommended workflow for fine-tuning in Azure OpenAI Studio. This includes preparing your data, training your custom model, deploying the model, and analyzing its performance

- Model Selection: Choose the right base model for fine-tuning. Azure OpenAI supports fine-tuning of several models including gpt-35-turbo-0613, babbage-002, and davinci-002

3. Prompt Engineering:

Even with fine-tuning, prompt engineering remains important. Fine-tuning can improve the model’s performance on specific tasks, but effective prompts can help guide the model to generate the desired output

4. Cost Management:

Be aware of the costs associated with fine-tuning. This includes the costs of training, inference, and hosting a fine-tuned model. Make sure to delete your fine-tuned model deployment when you’re done to avoid incurring unnecessary costs

5. Security and Compliance:

Ensure that your use of Azure OpenAI complies with all relevant security and compliance requirements. Azure provides a robust security model and adheres to over 90 compliance certifications

Remember, fine-tuning is a powerful tool, but it’s not always necessary. In many cases, you can achieve great results with prompt engineering alone. Fine-tuning should be considered when you need to set the style, tone, format, or other qualitative aspects, improve reliability at producing a desired output, correct failures to follow complex prompts, handle many edge cases in specific ways, or perform a new skill or task that’s hard to articulate in a prompt.

What Security Threats Do LLM Models Face?

Fine-tuned models, like all AI models, can be susceptible to various security threats. Here are some common ones:

1. Prompt Injections:

This involves bypassing filters or manipulating the model using carefully crafted prompts that make the model ignore previous instructions or perform unintended actions². These vulnerabilities can lead to unintended consequences, including data leakage, unauthorized access, or other security breaches.

2. Data Leakage:

A common concern is that a model might ‘learn’ from your prompts and offer that information to others who query for related things. Or the data that you share via queries is stored online and may be hacked, leaked, or more likely, accidentally made publicly accessible.

3. Inadequate Sandboxing and Unauthorized Code Execution:

It is a security threat that can occur when a large language model (LLM) is not properly isolated when it has access to external resources or sensitive systems. This lack of proper isolation, or “sandboxing”, can lead to potential exploitation and unauthorized code execution. These vulnerabilities highlight their potential impact, ease of exploitation, and prevalence.

To mitigate these threats, it’s important to implement strict input validation and sanitization for user-provided prompts, use context-aware filtering and output encoding to prevent prompt manipulation, and regularly update and fine-tune the model to improve its understanding of malicious inputs and edge cases. Additionally, ensuring that your use of Azure OpenAI complies with all relevant security and compliance requirements is crucial.

When deploying AI models, ensuring a robust security model is paramount. This is especially true when the models are designed to interact with sensitive enterprise data. Azure provides a comprehensive security model to protect your data and models.

Azure Security Model

In the context of large language models, it’s important to note that these models do not have access to any specific documents, databases, or proprietary information unless explicitly provided in the prompt by the user. They generate responses based on a mixture of licensed data, data created by human trainers, and publicly available data.

When using enterprise data for prompt responses, Azure ensures that the data is used in a secure and compliant manner. The models do not store personal data from the prompts or the generated responses. Furthermore, Azure has strict guidelines and mechanisms in place to ensure that the use of data aligns with its terms of use and privacy policies.

By leveraging Azure’s robust security model, you can deploy your large language models with confidence, knowing that your data and models are secure.

Azure Security & Compliance Best Practices for LLMs

Ensuring the security and compliance of your fine-tuned model involves several best practices:

1. Data Protection:

Azure OpenAI ensures that your prompts (inputs) and completions (outputs), your embeddings, and your training data are not available to other customers, OpenAI, or used to improve OpenAI models². Your fine-tuned Azure OpenAI models are available exclusively for your use.

2. Security Best Practices:

Follow Azure’s security best practices when designing, deploying, and managing your cloud solutions¹. This includes data encryption, network security, and access control.

- Compliance: Ensure that your use of Azure OpenAI complies with all relevant security and compliance requirements. In addition, Azure adheres to over 90 compliance certifications, including GDPR, HIPAA, and ISO 27001, ensuring that your AI deployments meet the highest standards of security and compliance.

- Visibility and Control: Ensure single-pane visibility into your Azure resources and manage their security posture.

- Identity Management: Enable identity as a security perimeter to protect both your data plane and cloud-control plane.

- Security Score: Leverage tools that quantify your security posture through an actionable security score.

Remember, security and compliance are ongoing processes. Regularly review and update your security practices to address evolving threats and changes in your business needs.

AI Safety and Content Filtering

AI safety and content filtering are crucial aspects of deploying AI models, especially when these models interact with sensitive data or are exposed to a wide audience. Azure provides several options to ensure AI safety and content filtering.

Azure AI Content Safety

Azure AI Content Safety is a content moderation platform that uses AI to keep your content safe. It uses advanced AI-powered language and vision models to monitor text and image content for safety.

Language models analyse multilingual text, in both short and long form, with an understanding of context and semantics. Vision models perform image recognition and detect objects in images using state-of-the-art Florence technology. AI content classifiers identify sexual, violent, hate, and self-harm content with high levels of granularity.

Azure OpenAI Service Content Filtering

Azure OpenAI Service includes a content filtering system that works alongside core models. This system works by running both the prompt and completion through an ensemble of classification models aimed at detecting and preventing the output of harmful content. The content filtering system detects and takes action on specific categories of potentially harmful content in both input prompts and output completions⁷.

The content filtering system integrated in the Azure OpenAI Service contains neural multi-class classification models aimed at detecting and filtering harmful content; the models cover four categories (hate, sexual, violence, and self-harm) across four severity levels (safe, low, medium, and high).

Examples of Azure AI Safety Options

Here are a few examples of how Azure AI safety options can be used:

- Online Marketplaces: Azure AI Content Safety can be used to moderate product catalogs and other user-generated content.

- Gaming Companies: Azure AI Content Safety can moderate user-generated game artifacts and chat rooms.

- Social Messaging Platforms: Azure AI Content Safety can moderate images and text added by their users.

- Enterprise Media Companies: Azure AI Content Safety can implement centralized moderation for their content.

- K-12 Education Solution Providers: Azure AI Content Safety can filter out content that is inappropriate for students and educators.

By leveraging Azure’s robust AI safety options, you can ensure that your AI models are used responsibly and for their intended purposes.

Conclusion

In the realm of generative AI, mastering the art of prompt engineering, fine-tuning models on Azure, and ensuring a robust security model are crucial steps in harnessing the power of large language models.

Prompt engineering allows you to guide the model’s output, making it a powerful tool in your AI toolkit. Fine-tuning on Azure enables you to specialize your models for specific tasks, leading to more accurate and contextually relevant results.

But beyond these, the importance of a robust security model cannot be overstated. Ensuring that your models are secure and compliant is paramount in today’s digital age. Azure’s comprehensive security model, coupled with its AI safety options, provides a secure environment for deploying your models.

Whether you’re generating content for a blog post, drafting an email, or creating responses for a chatbot, these practices give you the control to shape the model’s output and ensure its safe use.

As we continue to explore the exciting world of generative AI, remember that the power of these models lies not just in their capabilities, but also in how effectively and responsibly we use them. Stay tuned for the next instalment in our series, where we will delve deeper into this fascinating field. Until then, happy experimenting!

Frequently Asked Questions

What is prompt engineering in large language models?

Prompt engineering is the process of designing specific inputs to guide an AI model’s responses, making it more relevant and accurate for various tasks.

How does prompt engineering improve AI model performance?

It enhances model performance by providing clear guidance, which helps the model generate precise, context-specific outputs.

What is fine-tuning in large language models?

Fine-tuning is an additional training step that customizes a pre-trained model for a specific task or domain, improving its performance and relevance.

How do you fine-tune an AI model on Azure?

Fine-tuning on Azure involves data preparation, following Azure’s fine-tuning workflow, model selection, prompt engineering, cost management, and adhering to security and compliance guidelines.

What security threats do large language models (LLMs) face?

Common threats include prompt injections, data leakage, and inadequate sandboxing. These risks can be mitigated with input validation, context-aware filtering, and regular model updates.

How does Azure help ensure security and compliance in AI models?

Azure provides a robust security model that includes data encryption, network security, compliance certifications like GDPR and HIPAA, and AI content safety options, ensuring that AI deployments meet high security and ethical standards.

What is Azure’s AI Content Safety?

Azure’s AI Content Safety is a content moderation platform that monitors and filters text and image content for harmful material, supporting responsible AI use in environments like social media, gaming, and educational platforms.

How does prompt engineering differ from fine-tuning?

Prompt engineering adjusts how a user interacts with a model to achieve desired outputs, while fine-tuning involves additional training of the model on task-specific data to improve performance in a particular domain.